Editor’s Note: ChefConf 2018 ‘How We Did It’ Series

At ChefConf, we presented our Product Vision & Announcements for 2018. During the opening keynotes, attendees were treated to a live demonstration of Chef’s latest developments for Chef Automate, Habitat, InSpec, and the newly launched Chef Workstation. This blog post will be the first of three deep-dive peeks under the hood of each of the on-stage demos we presented. We invite you to follow along and get hands-on with our latest and greatest features to serve as inspiration to take your automation to the next level! Today Nell Shamrell-Harrington, Senior Software Development Engineer on the Habitat team, will take us on a guided tour of using the Habitat Kubernetes Operator to start deploying apps into the Google Kubernetes Engine (GKE). Enjoy!

Habitat and Kubernetes are like peanut butter and jelly. They are both wondrous on their own, but together they become something magical and wholesome. Going into ChefConf, the Habitat team knew how incredible the Habitat Kubernetes integration is, and we wanted to make sure that every single attendee left the opening keynote knowing it too. In case you missed it, watch a recording of the demo presentation here.

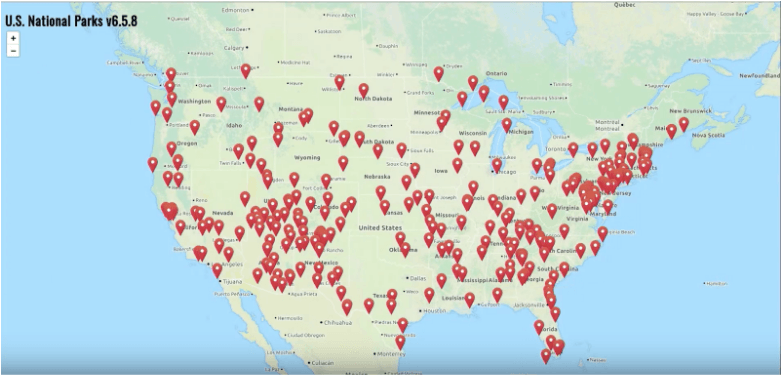

We decided to showcase the Habitat National Parks Demo (initially created by the Chef Customer Facing Teams). This app highlights packaging Java applications with Habitat (many of our Enterprise customers heavily use Java) and running two Habitat services – one for the Java app, and one for the MongoDB database used by the app.

Let’s go through how we made this happen!

Initial Demo Setup

In order to set up the demo environment, we first created a GKE Cluster on Google Cloud. Once that was up, we needed to install the Habitat Kubernetes Operator.

One of the easiest ways to install the Habitat Operator is by cloning the Habitat Kubernetes Operator GitHub repo:

$ git clone https://github.com/habitat-sh/habitat-operator
$ cd habitat-operator

That repo includes several example files for deploying the Habitat operator.

GKE required us to use Role Based Access Control (RBAC) authorization, which gives the Habitat operator the permissions it needs to manage its resources in the GKE cluster. To deploy the operator to GKE, we used the config files located at examples/rbac:

$ ls examples/rbac/
README.md habitat-operator.yml minikube.yml rbac.yml

Then we created the RBAC resources with:

$ kubectl apply -f examples/rbac/rbac.yml

Next, we deployed the operator using the file at examples/rbac/habitat-operator.yml, which pulls the Habitat Operator Docker container image from Docker Hub and deploys it to the GKE cluster:

$ kubectl apply -f examples/rbac/habitat-operator.yml
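Before moving on, it can help to confirm that the operator actually came up. The helper below is our own addition, not part of the original setup, and it rests on two assumptions: that the Deployment defined in examples/rbac/habitat-operator.yml is named `habitat-operator` (in the current namespace), and that the operator registers a CustomResourceDefinition called `habitats.habitat.sh`. Check both names against the repo before relying on it.

```bash
#!/usr/bin/env bash
# Sketch: wait for the Habitat operator to roll out, then confirm its CRD exists.
# ASSUMPTION: Deployment name "habitat-operator" (current namespace) and
# CRD name "habitats.habitat.sh"; verify both against the habitat-operator repo.
check_operator() {
  local deployment="${1:-habitat-operator}"
  # Blocks until the Deployment's rollout finishes (or reports a failure),
  # then asks the API server whether the Habitat CRD is registered.
  kubectl rollout status "deployment/${deployment}" &&
    kubectl get crd habitats.habitat.sh
}
```

Run it as `check_operator`, or pass a different Deployment name if your manifest uses one.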

We also needed to deploy the Habitat Updater, which watches Builder for updated packages and automatically pulls and deploys them (the real magic piece of the demo):

$ git clone git@github.com:habitat-sh/habitat-updater.git
$ cd habitat-updater
$ kubectl apply -f kubernetes/rbac/rbac.yml
$ kubectl apply -f kubernetes/rbac/updater.yml

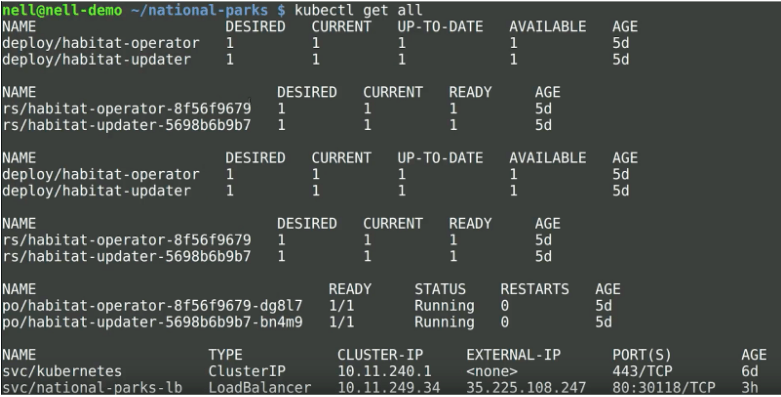

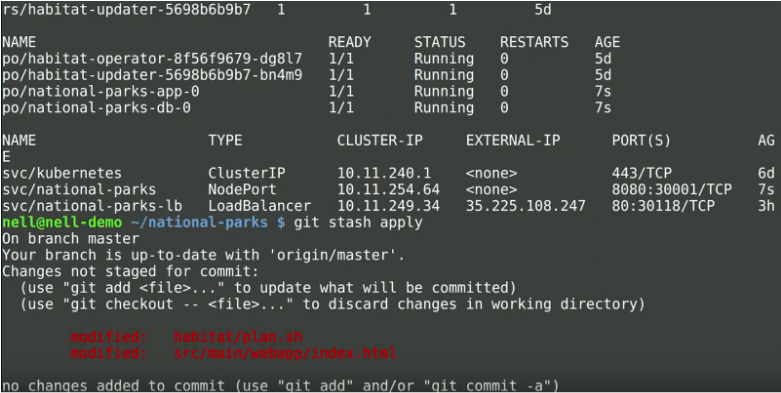

At this point, our GKE cluster looked like the screenshots above.

And we were ready to get National Parks deployed to the cluster!

Deploying the App

For the purposes of the demo, we forked the National Parks app into our own repository.

Then we added the necessary Kubernetes config files to the habitat-operator directory:

$ git clone git@github.com:habitat-sh/national-parks.git
$ cd national-parks
$ ls habitat-operator
README.md gke-service.yml habitat.yml

The gke-service.yml file contains the config for an external load balancer (a Kubernetes Service), which we created with:

$ kubectl apply -f habitat-operator/gke-service.yml
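A GKE load balancer usually takes a little while to be assigned an external IP. A small polling helper could look like the sketch below. It assumes the Service defined in gke-service.yml is named `national-parks`, which is a hypothetical name; substitute whatever name the file actually uses.

```bash
#!/usr/bin/env bash
# Sketch: poll a LoadBalancer Service until GKE assigns it an external IP.
# ASSUMPTION: pass the Service name from gke-service.yml; "national-parks"
# in the usage example is hypothetical.

# Print the first ingress IP of the Service (empty while still pending).
lb_ip() {
  kubectl get service "$1" -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Try up to ATTEMPTS times (default 30), 10 seconds apart; print the IP on success.
wait_for_lb_ip() {
  local svc="$1" attempts="${2:-30}" ip i
  for ((i = 0; i < attempts; i++)); do
    ip="$(lb_ip "$svc")"
    if [[ -n "$ip" ]]; then
      echo "$ip"
      return 0
    fi
    sleep 10
  done
  echo "no external IP for service $svc after $attempts attempts" >&2
  return 1
}
```

For example, `ip="$(wait_for_lb_ip national-parks)" && echo "Load balancer IP: $ip"` prints the address once it is ready.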

Then it was time to deploy the actual application with habitat.yml. Applying this file pulled the MongoDB and National Parks app container images from Docker Hub and created containers from those images:

$ kubectl apply -f habitat-operator/habitat.yml
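The post does not reproduce habitat.yml itself. For orientation only, here is a hypothetical sketch of the kind of Habitat custom resources such a file describes: one for MongoDB and one for the app, with the app binding to the database. The field layout reflects our reading of the habitat-operator's v1beta1 examples and may not match the operator version you run; the image names, resource names, and the `database` bind name are all placeholders.

```bash
#!/usr/bin/env bash
# ILLUSTRATIVE ONLY: field names follow our reading of the habitat-operator
# v1beta1 examples and may differ in your operator version. Image names,
# resource names, and the "database" bind name are HYPOTHETICAL.
habitat_manifest() {
  cat <<'EOF'
apiVersion: habitat.sh/v1beta1
kind: Habitat
metadata:
  name: national-parks-db
spec:
  image: example/mongodb          # hypothetical image on Docker Hub
  count: 1
  service:
    name: mongodb
    topology: standalone
    group: default
---
apiVersion: habitat.sh/v1beta1
kind: Habitat
metadata:
  name: national-parks-app
spec:
  image: example/national-parks   # hypothetical image on Docker Hub
  count: 1
  service:
    name: national-parks
    topology: standalone
    bind:
      - name: database            # bind name assumed from the app's Habitat plan
        service: mongodb
        group: default
EOF
}
```

Once the placeholders are replaced, `habitat_manifest | kubectl apply -f -` would submit both resources; in the demo itself we simply applied the habitat.yml file from the repo.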

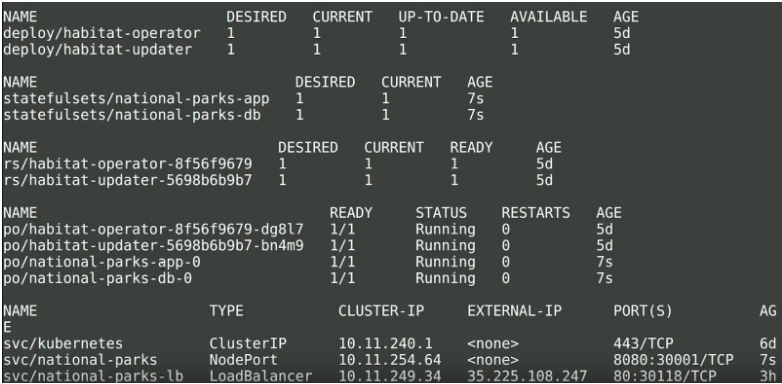

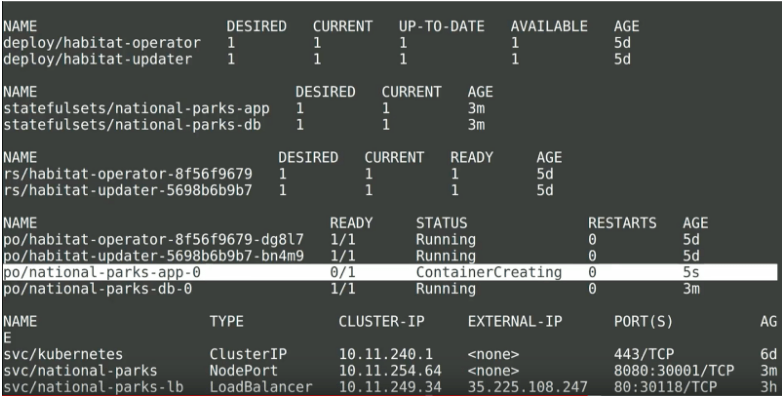

Then, our GKE cluster looked like this:

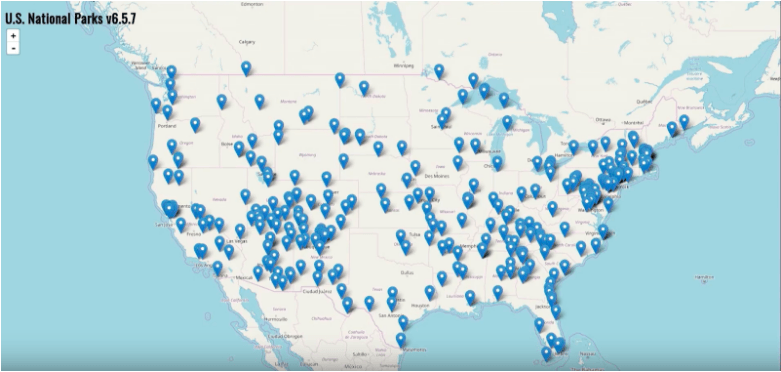

And we could see the running app by heading to the IP address of the GKE service.

At this point we could easily demo creating a new deployment of the National Parks app, but the real magic would be making a change to the app and watching it roll seamlessly through the entire pipeline.

Prior to the demo, I created and stashed some style changes to the National Parks app (which would show up well on a projection screen).

During the demo, I took those changes out of the stash, then committed and pushed them to the national-parks GitHub repo:

$ git add .
$ git commit -m 'new styles'
$ git push origin master

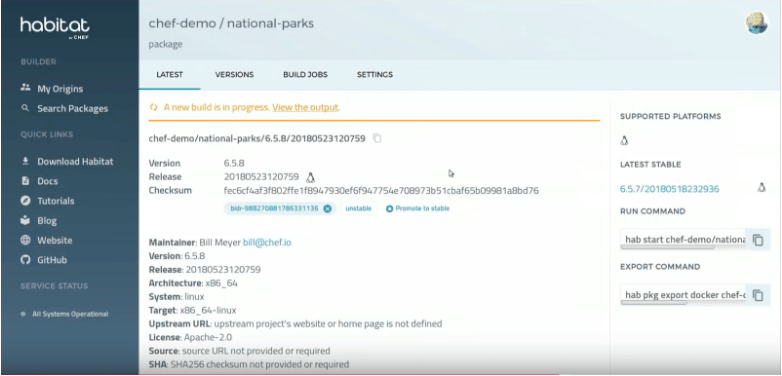

We had previously connected this GitHub repo to the public Builder, so any change pushed to the master branch automatically triggered a new build on Builder.

We had also connected our Builder project to Docker Hub: as soon as a new build completed, Builder automatically pushed a new Docker container image of our app to Docker Hub.

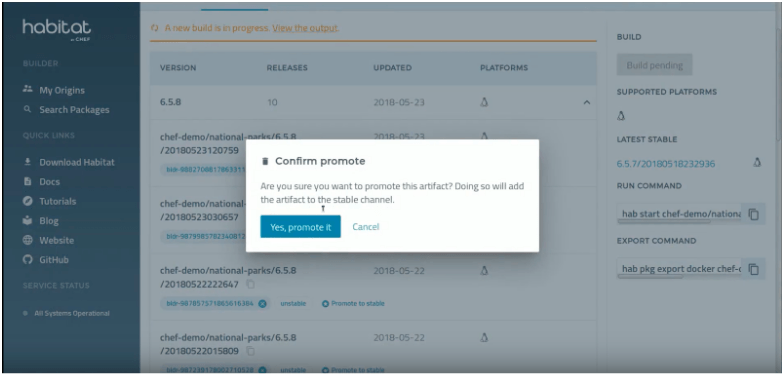

Now, all that was left to do was to promote the new build to the stable channel of Builder.
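The same promotion can also be done from the command line with `hab pkg promote`. The sketch below assumes the `hab` CLI is installed and an auth token with rights on the origin is available (for example via `HAB_AUTH_TOKEN`); the identifier in the usage example is a placeholder, so copy the real fully qualified identifier of your build from Builder.

```bash
#!/usr/bin/env bash
# Sketch: promote one Builder build to the stable channel from the CLI.
# ASSUMPTION: hab CLI installed and HAB_AUTH_TOKEN set for the origin.
promote_to_stable() {
  local ident="$1" origin name version release extra
  IFS=/ read -r origin name version release extra <<<"$ident"
  # Promotion targets one specific build, so require a fully qualified
  # ident: origin/name/version/release (exactly four parts).
  if [[ -z "$release" || -n "$extra" ]]; then
    echo "expected origin/name/version/release, got: $ident" >&2
    return 1
  fi
  hab pkg promote "$ident" stable
}
```

For example: `promote_to_stable <origin>/national-parks/<version>/<release>`, with the placeholders filled in from the build's page on Builder.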

Now the Habitat Updater came into play. Its purpose is to query Builder for new stable versions of the Habitat packages deployed within the cluster. Every 60 seconds, the Updater queries Builder and, if there is an updated package, pulls that package's Docker container image from Docker Hub and re-creates the containers running that image.
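To make that behaviour concrete, here is a minimal sketch of the polling loop for a single package. It is not the Updater's actual code, which also discovers which packages are deployed in the cluster. `latest_stable_ident` and `redeploy` are hypothetical placeholders you would back with a real Builder query and a real Kubernetes update, and for simplicity any difference from the running identifier counts as an update.

```bash
#!/usr/bin/env bash
# Sketch of the polling behaviour described above; NOT the Updater's real code.
# Both helpers below are HYPOTHETICAL placeholders.

# Hypothetical: print the newest fully qualified ident (origin/name/version/release)
# of ORIGIN/NAME in Builder's stable channel.
latest_stable_ident() {
  echo "latest_stable_ident: replace with a real Builder query" >&2
  return 1
}

# Hypothetical: pull the image for IDENT and re-create the containers running it.
redeploy() {
  echo "redeploy: replace with real logic for $1" >&2
  return 1
}

# One check: compare the running ident with Builder's stable ident and redeploy
# on change. Prints the ident that should be considered "running" afterwards.
check_once() {
  local running="$1" origin name latest
  IFS=/ read -r origin name _ <<<"$running"
  latest="$(latest_stable_ident "$origin" "$name")" || { echo "$running"; return 1; }
  if [[ "$latest" != "$running" ]]; then
    redeploy "$latest" || { echo "$running"; return 1; }
  fi
  echo "$latest"
}

# Repeat the check every INTERVAL seconds (60, as in the demo), forever.
watch_package() {
  local current="$1" interval="${2:-60}"
  while true; do
    current="$(check_once "$current")"
    sleep "$interval"
  done
}
```

Keeping the single check separate from the loop makes the comparison logic easy to exercise on its own, without waiting on a timer.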

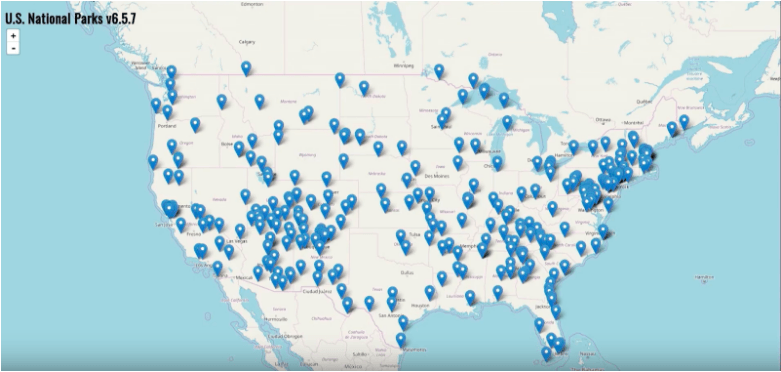

Then all we had to do was revisit the IP address of the load balancer, and we could see our changes live!

The whole purpose of this demo was to showcase the magic of Habitat and Kubernetes. Based on reactions to the demo, we succeeded!

Acknowledgements

Although I had the privilege of running the demo onstage, credit for creating this demo must also be shared with Fletcher Nichols and Elliot Davis – two of my fellow Habitat core team members. It takes a village to make a demo and I am so lucky to have the Habitat core team as my village.

Get started with Habitat

- Download and set up Habitat in minutes

- Choose a topic to begin learning how Habitat can help your team build, deploy, and manage all of your applications – both new and legacy – in a cloud-native way.

- Join the Habitat Community Slack